Multilingual Natural Language Processing @ Sapienza

Home Page and Blog of the Multilingual NLP course @ Sapienza University of Rome

Wednesday, April 24, 2024

Lecture 14 (19/04/2024, 4h, TAs): homework 1b

Introduction to homework 1b. In-class lab for the homework.

Lecture 12 (12/04/2024, 3.5h): the Transformer

Introduction to the Transformer architecture. Encoder, decoder. Positional embeddings. Self-attention. Cross-attention. Decoding. Introduction to homework 1b.

Lecture 11 (11/04/2024, 2h): the attention mechanism

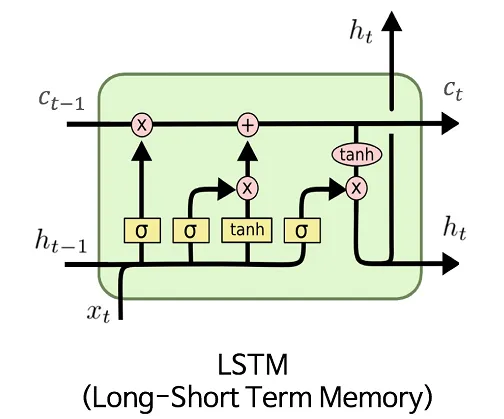

Neural language modeling. Context2vec. Neural language models with BiLSTMs. Contextualized word representations. Introduction to the attention mechanism.

Tuesday, April 9, 2024

Lecture 10 (05/04/2024, 4h): notebooks on a real-world review classification example, training in NLP, hyperparameters, LSTMs

Notebooks on a real-world review classification example. Brief introduction to Part-of-Speech tagging. LSTMs recap. Notebook on Part-of-Speech tagging with LSTMs. Best practices for data preprocessing and the training procedure.

Friday, March 22, 2024

Lecture 8 (22/03/2024, 4h): introduction to language modeling

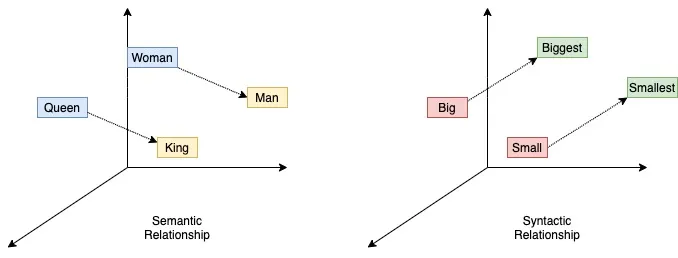

Negative sampling in the word2vec notebook. What is a language model? N-gram models (unigrams, bigrams, trigrams): how they model probabilities, and their issues. Chain rule and n-gram estimation. Static vs. contextualized embeddings. Introduction to Recurrent Neural Networks.
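As a reminder of how the chain rule and n-gram estimation fit together, here is a minimal sketch of a bigram model estimated by relative frequency. It is not taken from the course notebooks: the toy corpus, the `<s>` and `</s>` boundary markers, and the function names are all assumptions made for illustration.

```python
from collections import Counter

def train_bigram(sentences):
    """Count histories and bigrams over tokenized sentences (hypothetical helper)."""
    history_counts = Counter()
    bigram_counts = Counter()
    for tokens in sentences:
        # Boundary markers are an assumption of this sketch.
        padded = ["<s>"] + tokens + ["</s>"]
        for prev, cur in zip(padded, padded[1:]):
            history_counts[prev] += 1
            bigram_counts[(prev, cur)] += 1
    return history_counts, bigram_counts

def bigram_prob(prev, cur, history_counts, bigram_counts):
    """Maximum-likelihood estimate: count(prev, cur) / count(prev)."""
    if history_counts[prev] == 0:
        return 0.0
    return bigram_counts[(prev, cur)] / history_counts[prev]

def sentence_prob(tokens, history_counts, bigram_counts):
    """Chain rule under the bigram assumption: product of P(w_i | w_{i-1})."""
    padded = ["<s>"] + tokens + ["</s>"]
    prob = 1.0
    for prev, cur in zip(padded, padded[1:]):
        prob *= bigram_prob(prev, cur, history_counts, bigram_counts)
    return prob

# Toy corpus, invented for this example.
corpus = [
    ["the", "cat", "sleeps"],
    ["the", "dog", "sleeps"],
    ["the", "cat", "eats"],
]
history_counts, bigram_counts = train_bigram(corpus)

seen = sentence_prob(["the", "cat", "sleeps"], history_counts, bigram_counts)
unseen = sentence_prob(["the", "dog", "eats"], history_counts, bigram_counts)
print(seen, unseen)
```

The second sentence uses only known words, yet it gets probability zero because the bigram ("dog", "eats") never occurs in the corpus. This is the sparsity issue that unsmoothed n-gram models run into.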

Lecture 6 (15/03/2024, 4h): negative sampling, homework 1 assignment

Negative sampling: the skip-gram case; changes in the loss function. Homework 1 assignment.
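For reference, the change in the loss function can be sketched as follows, assuming the standard skip-gram negative-sampling objective for one (center, context) pair with K sampled negatives: -log σ(u_o · v_c) - Σ_k log σ(-u_k · v_c). The vectors and function names below are illustrative assumptions, not the lecture's notebook code.

```python
import math

def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def sgns_loss(center, context, negatives):
    """Negative-sampling loss for one training pair (hypothetical helper).

    center:    input vector of the center word
    context:   output vector of the observed context word
    negatives: output vectors of the K sampled negative words
    """
    # Push the observed pair towards a high score...
    loss = -math.log(sigmoid(dot(center, context)))
    # ...and each sampled negative towards a low score.
    for neg in negatives:
        loss -= math.log(sigmoid(-dot(center, neg)))
    return loss

# Toy 2-dimensional vectors, invented for this example.
good = sgns_loss([1.0, 0.0], [2.0, 0.0], [[-2.0, 0.0]])
bad = sgns_loss([1.0, 0.0], [-2.0, 0.0], [[2.0, 0.0]])
print(good, bad)
```

Compared with the full softmax, each update touches only the context word and the K negatives instead of the whole vocabulary, which is the point of the technique.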